ChatGPT: Optimizing Patient Care

The accomplishments of AI in the healthcare sector are impressive, but as AI continues to improve patient care and enhance provider skills, addressing its challenges will be critical.

- By Lee Warren

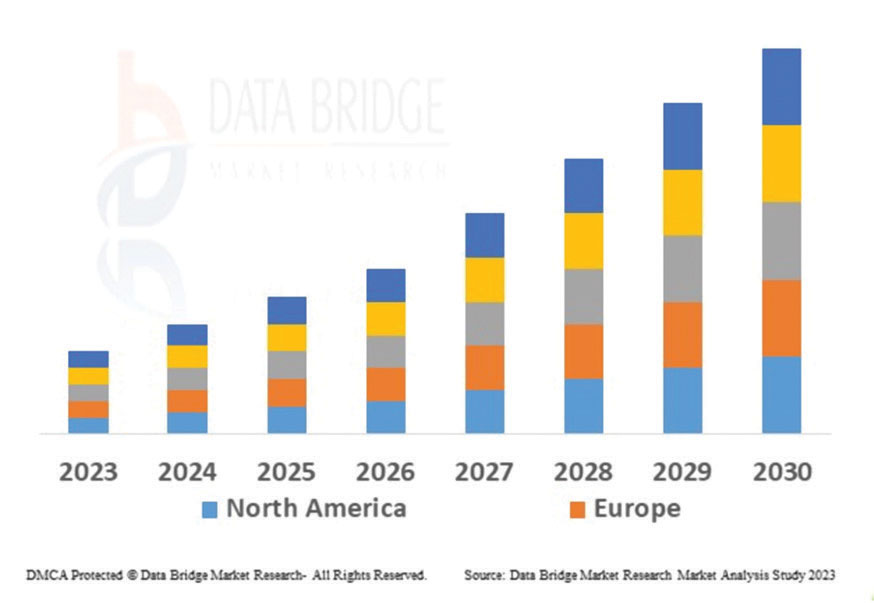

Artificial intelligence (AI) in healthcare is growing at an unprecedented rate (Figure 1). Since OpenAI’s ChatGPT (GPT stands for generative pre-trained transformer) was introduced to the world in November 2022, it has accomplished some amazing feats, including passing law exams at the University of Minnesota and a business exam at the University of Pennsylvania’s Wharton School,1 acing standardized tests and even helping to diagnose diseases that have sometimes baffled medical professionals.

Figure 1. Global AI Healthcare Market

Experts predict the global AI in healthcare market value will reach $272.91 billion by 2030.

Source: AI in Healthcare – Statistics and Trends. FreeAgent, March 15, 2023. Accessed at resources.freeagentcrm.com/ai-in-healthcare-statistics.

One such medical case occurred when a woman’s nanny told her that her 4-year-old son, Alex, needed Motrin every day before he could play; otherwise, he had a meltdown. Over the next three years, Alex’s mom, Courtney, took him to a dentist and 17 different doctors to try to determine the cause of his pain but had no success. Frustrated, Courtney opened ChatGPT one night after an emergency room (ER) visit and fed the AI platform everything she knew about her son’s symptoms, using data from his medical charts. ChatGPT suggested her son had tethered cord syndrome. Courtney scheduled an appointment with a neurosurgeon and shared her suspicion. The neurosurgeon ordered an MRI, then confirmed the diagnosis, allowing Alex to undergo surgery to correct the condition.2

While impressive, ChatGPT has some limitations. Researchers from the Innovation in Operations Research Center at Mass General Brigham (MGB) tested ChatGPT on all 36 published clinical vignettes from the Merck Sharp & Dohme (MSD) Clinical Manual and compared its accuracy on differential diagnoses, diagnostic testing, final diagnosis and management, broken down by patient age, gender and case acuity. They concluded that ChatGPT achieved an overall accuracy of 71.7 percent, comparable to human doctors in some scenarios. ChatGPT not only came up with possible diagnoses, but also made final diagnoses and care management decisions.3 It demonstrated the highest performance in making a final diagnosis, with an accuracy of 76.9 percent, and the lowest performance in generating an initial differential diagnosis, with an accuracy of 60.3 percent.4 As such, physicians will continue to play a critical role in interpreting ChatGPT output as they seek the best possible care for their patients.

“ChatGPT struggled with differential diagnosis, which is the meat and potatoes of medicine when a physician has to figure out what to do,” said Marc Succi, MD, associate chair of innovation and commercialization and strategic innovation leader at Mass General Brigham and executive director of its MESH Incubator’s Innovation in Operations Research Group, in a statement. “That is important because it tells us where physicians are truly experts and adding the most value — in the early stages of patient care with little presenting information, when a list of possible diagnoses is needed.”3

How Is AI Trained?

AI systems work by using algorithms and data. Data is collected and applied to mathematical models, or algorithms, which then use the information to recognize patterns and make predictions. Once algorithms have been trained, they are deployed within various applications where they continuously learn from and adapt to new data. This allows AI systems to perform complex tasks such as image recognition, language processing and data analysis with greater accuracy and efficiency over time.5
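The train-then-predict cycle described above can be illustrated with a deliberately tiny sketch. It is not how ChatGPT or any clinical system is built; it is a one-feature logistic model fitted with gradient descent, and the readings, outcomes, function names and settings are all hypothetical values chosen for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, labels, epochs=2000, lr=0.1):
    """Fit a one-feature logistic model with plain gradient descent."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            # How far the current prediction is from the known answer.
            error = sigmoid(weight * x + bias) - y
            # Nudge the parameters to shrink that error.
            weight -= lr * error * x
            bias -= lr * error
    return weight, bias


def predict(model, x: float) -> float:
    """Return the model's estimated probability of a positive outcome."""
    weight, bias = model
    return sigmoid(weight * x + bias)


# Hypothetical toy data: one measurement per case and a 0/1 outcome.
readings = [1.0, 2.0, 3.0, 6.0, 7.0, 8.0]
outcomes = [0, 0, 0, 1, 1, 1]

model = train(readings, outcomes)
print(f"Low reading:  {predict(model, 2.0):.2f}")
print(f"High reading: {predict(model, 7.0):.2f}")
```

The model starts with no knowledge (weight and bias of zero) and learns the pattern only from the examples it is shown, which is why the quality of the training data matters so much.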

Beyond assisting with diagnoses, there are many more ways ChatGPT can benefit everyone involved in the healthcare process.

Minimizes Wait Times

Humber River Hospital in Toronto was the first in Canada to use AI to track and control patient flow. The hospital also uses AI to analyze medical images, which expedites pathology assessments, and to assist with making faster and more accurate diagnoses. What’s more, AI is allowing doctors to obtain a more comprehensive understanding of patients’ mental health by gathering feedback and following trends.6

In one study, ChatGPT-4 provided imaging recommendations and generated radiology referrals using clinical notes from ER cases. With the American College of Radiology Appropriateness Criteria (ACR AC) as the reference standard, the chatbot’s imaging recommendations were consistent with the criteria in approximately 95 percent of the cases. Two radiologists also rated the generated referrals, giving the chatbot mean scores of 4.6 and 4.8 (out of 5.0) for clarity, 4.5 and 4.4 for clinical relevance and 4.9 (from both readers) for differential diagnosis.7 Referrals of this quality could reduce the likelihood of unnecessary or incorrect imaging, help radiologists better understand the clinical context and expedite diagnosis and treatment for patients.

Assists Patient Understanding

The 21st Century Cures Act requires all providers to release medical notes to patients, but sometimes the clinical notes provided by doctors can be confusing. Health tech company Vital launched an AI-powered doctor-to-patient translator that turns medical terminology into plain language at a fifth-grade reading level, according to Aaron Patzer, co-founder and CEO at Vital.8 This not only benefits patients, but clinicians as well, since they will have fewer patients confused by their notes.

Patients can also use other large language models (LLMs) such as ChatGPT to find clarity in the notes provided by their physicians. In a Yale University study involving 750 radiology reports, researchers tested four different LLMs, including ChatGPT, Bard and Bing, and found that all four were able to significantly simplify report impressions for patients with simple prompts such as, “I am a patient. Simplify this radiology report” and “Simplify this radiology report at the seventh grade level.”9

In another study, 15 radiologists were tasked with rating the quality of radiology reports simplified by ChatGPT with respect to factual correctness, completeness and potential harm for patients. Likert scale analysis and inductive free-text categorization were used to assess the quality of the simplified reports. Most of the 15 radiologists agreed that the simplified reports were factually correct, complete and not potentially harmful to patients. One of the study’s concluding points was that LLMs such as ChatGPT have vast potential to enhance patient-centered care in radiology and other medical domains, while still needing supervision by medical experts.10

Improves Efficiency

Efficiency gains are especially evident in telehealth, where ChatGPT can provide preliminary consultations, gather patient history, schedule virtual appointments, transcribe patient conversations, generate visit summaries and offer initial medical advice, improving access to healthcare and reducing the burden on healthcare professionals. ChatGPT can also analyze vast amounts of medical literature and patient data to assist medical professionals in interpreting test results.11

Patients can use ChatGPT for real-time information about drugs, including side effects, proper dosage, administration, storage of medication, interactions and potential contraindications. The AI tool also provides potential alternatives for patients who are allergic or intolerant to specific prescriptions, and healthcare providers can use it to stay informed about new medications, drug recalls and other important updates in the pharmaceutical industry.12

ChatGPT may also offer nurses on-demand access to recent research and recommendations for remote patient monitoring, as well as support and guidance in real time during physical checkups of patients. This may allow nurses to make better judgments and offer better care.13

Useful for Medical Training

Medical students at NYU Grossman School of Medicine are using ChatGPT to curate educational resources based on the diagnoses of patients being treated. This AI-driven system links patient data with relevant learning materials such as infographics and the latest medical literature, delivering customized educational content to students. ChatGPT is also being used to create virtual patients for training purposes, allowing students to practice interviewing patients and diagnosing conditions with feedback provided by AI.14

“I think in the future, we’re going to see medical education models that have AI as a copilot sitting next to the student, sitting next to their faculty and coaches, providing guidance and advice along the way, curating curricula and assessments, and […] at the level of that individual student, tailoring what they learn and how they learn so that they make the absolute best use of their time,” said Marc Triola, MD, associate dean of education informatics and director of the Institute for Innovations in Medical Education at NYU Grossman School of Medicine.14

The benefit of ChatGPT goes beyond medical students: It can help provide additional training to doctors, too. Richmond University Medical Center conducted a proof-of-concept study that demonstrated how ChatGPT could teach physicians how to break bad news to patients. The study found that using the AI tool to role-play can be beneficial in improving communication skills in high-stress situations.15 The process uses a standardized framework for breaking bad news known as SPIKES (Setting up, Perception, Invitation, Knowledge, Emotions with Empathy, and Strategy or Summary), and then the chatbot grades physicians on how well they did.

Drawbacks and Concerns

As mentioned, ChatGPT won’t replace medical professionals. Instead, it will assist them in offering the highest quality of care possible. The use of AI technology in healthcare does come with some concerns, though.

“Hallucinations” are near the top of the list. IBM defines hallucinations this way: “AI hallucination is a phenomenon wherein a large language model (LLM) — often a generative AI chatbot or computer vision tool — perceives patterns or objects that are nonexistent or imperceptible to human observers, creating outputs that are nonsensical or altogether inaccurate.”16 ChatGPT comes with a disclaimer for every user: “ChatGPT can make mistakes. Check important info.” As such, even though the AI tool has proven to be effective when it comes to diagnoses, medical education, streamlining the healthcare process and patient understanding, it is not a substitute for proper medical oversight.

Privacy is also a concern. Healthcare institutions manage these concerns through rigorous data privacy measures and compliance with legal standards such as the Health Insurance Portability and Accountability Act (HIPAA) in the United States and the General Data Protection Regulation (GDPR) in the European Union. They do so by using encryption and anonymization to protect patient data during collection, transmission and storage. They also implement robust access controls such as role-based permissions and two-factor authentication to ensure only authorized personnel can access sensitive information. Stanford Medicine uses a framework called ACCEPT-AI (Age, Communication, Consent and assent, Equity, Protection of data, and Technological considerations) to handle sensitive data, especially pediatric data, ethically. This framework guides researchers and clinicians in addressing consent and data protection issues.17
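Two of the safeguards mentioned above, masking identifiers and role-based permissions, can be sketched in a few lines. This is a minimal illustration, not any institution’s actual implementation: the role names, actions and key are hypothetical, and a keyed hash is strictly pseudonymization (whoever holds the key can re-link records), which is weaker than full anonymization.

```python
import hashlib
import hmac

# Hypothetical role-to-permission table; real systems define their own.
ROLE_PERMISSIONS = {
    "physician": {"read_chart", "write_chart"},
    "billing": {"read_invoice"},
}


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same ID and key always produce the same token, so records can
    still be linked without exposing the original identifier.
    """
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def is_allowed(role: str, action: str) -> bool:
    """Return True only if the role has been granted the action."""
    return action in ROLE_PERMISSIONS.get(role, set())


# Placeholder key for the demo; a real key would live in a secrets manager.
demo_key = b"demo-key-not-for-production"
print(pseudonymize("MRN-001", demo_key)[:16], "...")
print(is_allowed("physician", "read_chart"))
print(is_allowed("billing", "read_chart"))
```

Unknown roles fall through to an empty permission set, so access is denied by default rather than granted by accident.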

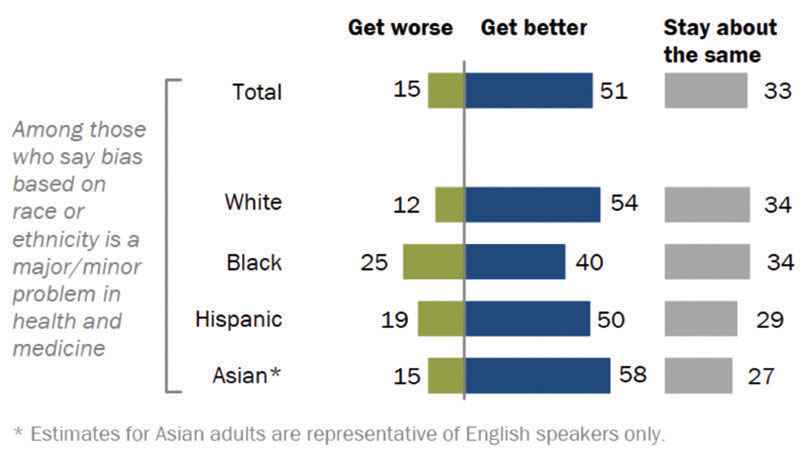

Finally, biases and fairness are a concern. AI models can inherit biases present in the training data, leading to unequal treatment of patients based on race, gender, socioeconomic status or other factors, which can exacerbate existing health disparities (Figure 2). In 2023, the Agency for Healthcare Research and Quality and the National Institute on Minority Health and Health Disparities convened a panel to examine and address the impact of healthcare algorithms on racial and ethnic disparities in healthcare. This resulted in five guiding principles for mitigating and preventing racial and ethnic bias in healthcare algorithms.18 In addition, the Cleveland Clinic has an AI task force that evaluates algorithms for quality, ethics and bias to mitigate health disparities and ensure responsible AI use.19 This kind of guidance and oversight of AI models will be crucial moving forward to ensure equitable output.

Figure 2. Ethnic Biases in Healthcare

Fifty-one percent of U.S. adults who say ethnic biases in healthcare are a problem believe AI will reduce bias.

Source: AI in Healthcare – Statistics and Trends. FreeAgent, March 15, 2023. Accessed at resources.freeagentcrm.com/ai-in-healthcare-statistics.
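One simple way to see whether a model treats patient groups unequally is to compare its accuracy group by group. The sketch below is only one basic check, not the method used by the panel or the task force described above, and the group labels and records are invented for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, predicted, actual) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def largest_gap(accuracies):
    """Difference between the best-served and worst-served group."""
    return max(accuracies.values()) - min(accuracies.values())


# Hypothetical audit records: (group label, model prediction, true outcome).
audit_records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 1, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 0, 1), ("B", 1, 1),
]

scores = accuracy_by_group(audit_records)
print(scores)
print(f"Largest accuracy gap: {largest_gap(scores):.2f}")
```

A large gap does not by itself prove bias, but it flags where reviewers should look more closely at the training data and the model’s behavior.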

The Future of AI

As ChatGPT and other LLM technologies advance, they are expected to significantly enhance diagnostic accuracy and support personalized medicine by analyzing extensive patient data more effectively. Advancements in AI-driven medical training and virtual simulations will continue to refine the skills of healthcare professionals.

However, addressing ethical and regulatory challenges will be crucial. Ensuring data privacy, minimizing biases and maintaining the integral role of human expertise in patient care will need to remain key priorities. As AI tools evolve, ongoing collaboration between technologists, healthcare providers and policymakers will be essential to fully realize their benefits while mitigating risks. With proper oversight, AI can be a tool of great significance in facilitating better health.

References

1. Moore, S. What Does ChatGPT Mean for Healthcare? Accessed at www.news-medical.net/health/What-does-ChatGPT-mean-for-Healthcare.aspx.

2. Holohan, M. A Boy Saw 17 Doctors Over 3 Years for Chronic Pain. ChatGPT Found the Diagnosis. Today, Sept. 11, 2023. Accessed at www.today.com/health/mom-chatgpt-diagnosis-pain-rcna101843.

3. Fox, A. ChatGPT Scored 72% in Clinical Decision Accuracy, MGB Study Shows. Healthcare IT News, Aug. 29, 2023. Accessed at www.healthcareitnews.com/news/chatgpt-scored-72-clinical-decision-accuracy-mgb-study-shows.

4. Rao, A, Pang, M, Kim, J, et al. Assessing the Utility of ChatGPT Throughout the Entire Clinical Workflow: Development and Usability Study. Journal of Medical Internet Research, 2023 Aug;25. Accessed at www.jmir.org/2023/1/e48659.

5. Glover, E. What Is AI, Why It Matters, How It Works. Built In, April 2, 2024. Accessed at builtin.com/artificial-intelligence.

6. Mazza, G. Canada’s Mental Health Crisis. CENGN, Aug. 22, 2022. Accessed at www.cengn.ca/information-centre/innovation/artificial-intelligence-ai-and-the-future-of-mental-health.

7. Murphy, H. ChatGPT Excels at Radiology Referrals in Emergency Departments. Health Imaging, July 12, 2023. Accessed at healthimaging.com/topics/health-it/enterprise-imaging/chatgpt-excels-radiology-referrals-emergency-departments.

8. Landi, H. Mint.com Founder’s Health Tech Company Launches AI Tool to Translate Medical Jargon for Patients. Fierce Healthcare, Aug. 8, 2023. Accessed at www.fiercehealthcare.com/health-tech/mintcom-founders-health-tech-company-launches-ai-tool-translate-medical-jargon.

9. Ridley, EL. Large Language Models Simplify Radiology Report Impressions. Aunt Minnie, March 26, 2024. Accessed at www.auntminnie.com/imaging-informatics/artificial-intelligence/article/15667295/large-language-models-simplify-radiology-report-impressions.

10. Jeblick, K, Schachtner, B, Dexl, J, et al. ChatGPT Makes Medicine Easy to Swallow: An Exploratory Case Study on Simplified Radiology Reports. European Radiology, 2023 Oct;34:2817-2825. Accessed at link.springer.com/article/10.1007/s00330-023-10213-1.

11. ChatGPT in Healthcare: Top Benefits, Use Cases, and Challenges. Matellio, March 4, 2024. Accessed at www.matellio.com/blog/chatgpt-use-cases-in-healthcare.

12. Marr, B. Revolutionizing Healthcare: The Top 14 Uses of ChatGPT In Medicine and Wellness. Bernard Marr and Co., March 15, 2023. Accessed at bernardmarr.com/revolutionizing-healthcare-the-top-14-uses-of-chatgpt-in-medicine-and-wellness.

13. Sharma, M, and Sharma, S. A Holistic Approach to Remote Patient Monitoring, Fueled by ChatGPT and Metaverse Technology: The Future of Nursing Education. Nurse Education Today, December 2023. Accessed at www.sciencedirect.com/science/article/abs/pii/S0260691723002666.

14. ChatGPT in Medical Education: Generative AI and the Future of Artificial Intelligence in Health Care. American Medical Association, Feb. 23, 2024. Accessed at www.ama-assn.org/practice-management/digital/chatgpt-medical-education-generative-ai-and-future-artificial.

15. Webb, JJ. Proof of Concept: Using ChatGPT to Teach Emergency Physicians How to Break Bad News. Cureus, 2023;15(5). Accessed at www.cureus.com/articles/154391-proof-of-concept-using-chatgpt-to-teach-emergency-physicians-how-to-break-bad-news.

16. What Are AI Hallucinations? Accessed at www.ibm.com/topics/ai-hallucinations.

17. Hadhazy, A. Stanford Ethicists Developing Guidelines for the Safe Inclusion of Pediatric Data in AI-Driven Medical Research. Stanford University Human-Centered Artificial Intelligence, Sept. 6, 2023. Accessed at hai.stanford.edu/news/stanford-ethicists-developing-guidelines-safe-inclusion-pediatric-data-ai-driven-medical.

18. Chin, MH, Afsar-Manesh, N, Bierman, AS, et al. Guiding Principles to Address the Impact of Algorithm Bias on Racial and Ethnic Disparities in Health and Health Care. JAMA Network Open, 2023;6(12). Accessed at jamanetwork.com/journals/jamanetworkopen/fullarticle/2812958.

19. Siwicki, B. Cleveland Clinic’s Advice for AI Success: Democratizing Innovation, Upskilling Talent and More. Healthcare IT News, March 22, 2024. Accessed at www.healthcareitnews.com/news/cleveland-clinics-advice-ai-success-democratizing-innovation-upskilling-talent-and-more.